Description

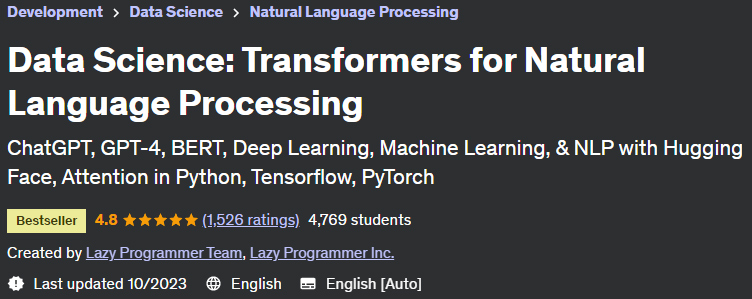

Data Science: Transformers for Natural Language Processing is a training course published by Udemy. Welcome to the Data Science: Transformers for Natural Language Processing course. Deep learning hasn’t been the same since transformers arrived on the scene. Machine learning can now generate text that is essentially indistinguishable from text written by humans. We have reached state-of-the-art performance on many NLP tasks, such as machine translation, question answering, entailment, named entity recognition, and more. We have created multimodal (text and image) models that can generate amazing art from nothing more than a text prompt. We have solved a longstanding problem in molecular biology known as “protein structure prediction”. In this course, you will learn very practical skills for applying transformers and, if you wish, the detailed theory of how transformers and attention work. This sets the course apart from many other resources, which cover only the former. The course is divided into 3 main parts:

- Using transformers

- Fine-tuning transformers

- Transformers in depth

Part 1: Using Transformers

In this part, you will learn how to use transformers that have already been trained for you. Training them costs millions of dollars, so it’s not something you want to attempt on your own! We will see how these prebuilt models can be used for a wide range of tasks, including:

- Text classification (e.g. spam detection, sentiment analysis, document classification)

- Named entity recognition

- Summarization

- Machine translation

- Question answering

- Generating (believable) text

- Masked language modeling (article spinning)

- Zero-shot classification

This is already very practical. If you need to perform sentiment analysis, document classification, entity recognition, translation, summarization, etc. on documents at work or for your clients, you already have the most powerful state-of-the-art models at your fingertips with very few lines of code. One of the most amazing applications is “zero-shot classification”, where you will see that a pretrained model can classify your documents even without any training.

Part 2: Fine-Tuning Transformers

In this part, you’ll learn how to improve the performance of transformers on your own custom datasets. With transfer learning, you can leverage the millions of dollars of training that have already gone into making transformers work so well. You will see that you can fine-tune a transformer with relatively little work (and at low cost). We’ll cover how to fine-tune transformers for the most practical real-world tasks, such as text classification (sentiment analysis, spam detection), entity recognition, and machine translation.

Part 3: Transformers in Depth

In this part, you will learn how transformers really work. The earlier parts are nice, but a little too nice. Libraries are fine for people who just want to get the job done, but they won’t help if you want to do something new or interesting. Let’s be clear: this is very practical. How practical, you might ask? Well, this is where the big money is. People who deeply understand these models and can do things no one has done before are in a position to command higher salaries and prestigious titles. Machine learning is a competitive field, and a deep understanding of how things work can be the edge you need to come out on top. We will also look at how to implement transformers from scratch. As the great Richard Feynman once said, “What I cannot create, I do not understand.”

Suggested prerequisites:

- Decent Python coding skills

- Deep learning with CNNs and RNNs (helpful but not required)

- Deep learning with seq2seq models (helpful but not required)

- For the in-depth part: an understanding of the theory behind CNNs, RNNs, and seq2seq is helpful

Planned updates:

- More fine-tuning applications

- More in-depth conceptual lectures

- Implementing transformers from scratch

Who is this course suitable for?

- Anyone who wants to master Natural Language Processing (NLP).

- Anyone who loves deep learning and wants to learn about the most powerful neural network (transformer).

- Anyone who wants to go beyond the typical beginner-only courses on Udemy.

What you will learn in the Data Science: Transformers for Natural Language Processing course:

- Apply transformers to real-world tasks with just a few lines of code

- Fine-tune transformers on your own datasets with transfer learning

- Sentiment analysis, spam detection, text classification

- NER (named entity recognition), part-of-speech tagging

- Create your own article spinner for SEO

- Produce believable human-like text

- Neural machine translation and text summarization

- Question answering (e.g. SQuAD)

- Zero-shot classification

- Understand self-attention and the in-depth theory of transformers

- Implement transformers from scratch

- Use transformers with TensorFlow and PyTorch

- Know BERT, GPT, GPT-2 and GPT-3 and where to apply them

- Understand encoder, decoder and seq2seq architecture

- Master the Hugging Face Python library
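The list above promises the in-depth theory of transformers and implementing them from scratch. At the heart of that theory is scaled dot-product attention, softmax(QKᵀ/√d_k)V. The following is a minimal single-head sketch in plain Python (no libraries), not code taken from the course. The helper names, the toy input `x`, and the identity projection weights `w` are hypothetical choices made only to keep the example small; a real model would use learned weight matrices, multiple heads, and batched tensors in PyTorch or TensorFlow.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m: Matrix) -> Matrix:
    return [list(row) for row in zip(*m)]


def softmax(row: List[float]) -> List[float]:
    # Subtract the max before exponentiating for numerical stability.
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix,
                                 causal: bool = False) -> Matrix:
    """Single-head softmax(Q K^T / sqrt(d_k)) V, without batching."""
    d_k = len(k[0])
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, transpose(k))]
    if causal:
        # Look-ahead mask: position i may only attend to positions j <= i.
        scores = [[s if j <= i else -math.inf for j, s in enumerate(row)]
                  for i, row in enumerate(scores)]
    # Each row of weights sums to 1; the output is a weighted average of V's rows.
    weights = [softmax(row) for row in scores]
    return matmul(weights, v)


# Hypothetical toy input: 3 tokens with embedding size 2.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
# In self-attention, Q, K and V are all projections of the same input.
# Identity weights stand in for learned projections here.
w = [[1.0, 0.0], [0.0, 1.0]]
q, k, v = matmul(x, w), matmul(x, w), matmul(x, w)
out = scaled_dot_product_attention(q, k, v)
```

Setting `causal=True` applies the look-ahead mask used in decoder (GPT-style) models, so each token attends only to itself and earlier tokens; encoder (BERT-style) self-attention leaves the mask off.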

Course details:

Course chapters as of 10/2023

Prerequisites of the Data Science: Transformers for Natural Language Processing course:

- Install Python, it’s free!

- Beginner and intermediate level content: Decent Python programming skills

- Expert level content: Good understanding of CNNs and RNNs and ability to code in PyTorch or TensorFlow

Installation guide:

After extracting, watch with your favorite player.

Subtitles: English

Quality: 720p

Changes:

Version 2023/3, compared to 2022/5, adds 42 lessons and 5 hours 40 minutes of content.

Version 2023/5, compared to 2023/3, adds 1 lesson and 8 minutes of content.

Version 2023/8, compared to 2023/5, adds 4 lessons and 40 minutes of content.

Archive password: www.downloadly.ir

Size: 6.04 GB